A new report by Mathematica Policy Research shows that students’ scores on the existing high school assessment in Massachusetts predict college performance as well as scores on a new test recently developed by a consortium of states to align with the Common Core standards. These findings will inform state policymakers as they decide whether to switch to the Partnership for Assessment of Readiness for College and Careers (PARCC) assessments, which were administered in 11 states and the District of Columbia last year, or to stay with the Massachusetts Comprehensive Assessment System (MCAS) to test student achievement in public schools.

Commissioned by the Massachusetts Executive Office of Education, Mathematica’s study is the first to rigorously analyze the extent to which PARCC assessments identify students who are ready for college, one of the test’s major goals.

Key findings from the report include:

- PARCC and MCAS scores have a similar ability to predict college grades, comparable to the predictive ability of SAT scores.

- Although the scores are equally predictive, the two assessments differ in how well their designated performance standards predict college grades and the need for remedial math, with PARCC’s standard outperforming MCAS’s in math.

Forty-eight percent of students meeting PARCC’s “college ready” standard in math earn an average GPA of B or higher in their first year of college, compared with only 24 percent of students meeting the MCAS “proficient” standard. In addition, students meeting PARCC’s college-ready standard were 11 percentage points less likely to be assigned to remedial classes than students meeting the MCAS proficient standard. In English language arts, the two standards are not statistically distinguishable.

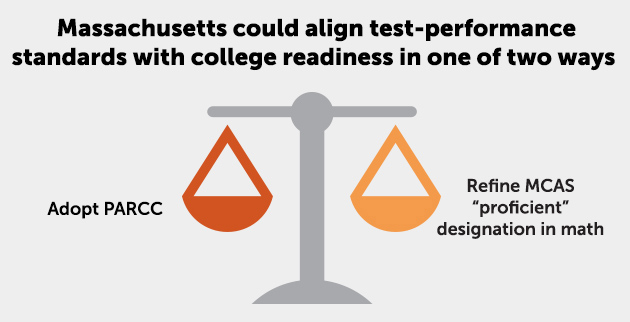

Ira Nichols-Barrer, the lead author of Mathematica’s report, said, “Our study provides immediate evidence that both PARCC and MCAS scores do equally well in predicting college success. But there are meaningful differences between the performance standards used by each test, raising important questions for Massachusetts policymakers. To align math performance standards with college readiness, the state could either adopt the PARCC exam or create a higher math standard on the MCAS exam. This study and the state’s eventual decision will provide models for other states weighing whether to keep or reform their statewide educational assessments.”

The national implications of these findings are highlighted in a Mathematica podcast discussion with education policy experts Brian Gill and Ira Nichols-Barrer.